Seamless Integration of Translation Services in Machine Learning

Imagine traveling with a magical tool that instantly translates any language into another, making communication effortless. This is no longer just a dream: advances in artificial intelligence (AI) and machine learning (ML) have made it a reality. Today, we’re going to explore how to integrate translation services into your ML projects, making your applications smarter and more accessible.

The Evolution of AI and Its Impact

Artificial intelligence has come a long way since its inception in the 1950s, with foundational contributions from pioneers like Alan Turing. Today, AI encompasses a wide range of technologies and applications, including machine learning (ML), deep learning, natural language processing (NLP), computer vision, and generative AI. These technologies are used to solve complex problems, enhance business efficiency, automate processes, and make smarter decisions.

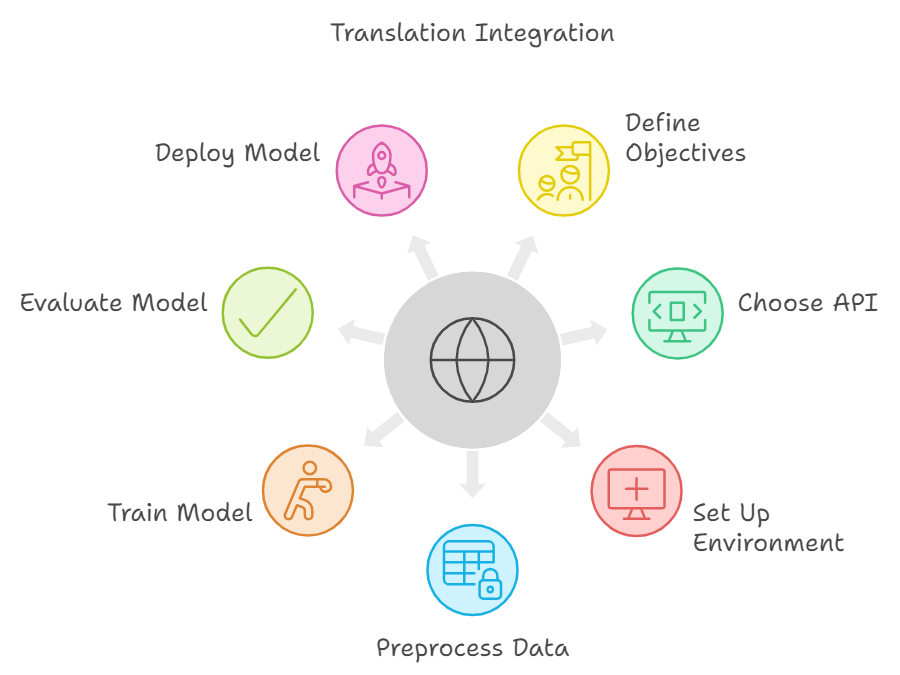

Step-by-Step Guide to Integrating Translation in ML Projects

Integrating translation services into your ML projects can seem daunting at first, but with a clear roadmap it becomes as straightforward as following a recipe. Let’s break it down step by step:

- Define Your Objectives
  - Identify the languages you need to support.
  - Determine the scope of translation (e.g., text, speech, images).

- Choose the Right Translation API
  - Evaluate APIs like Google Translate, Microsoft Translator, and Amazon Translate.
  - Consider factors such as accuracy, speed, and cost.

- Set Up Your Environment
  - Ensure you have a robust ML framework (e.g., TensorFlow, PyTorch).
  - Integrate the chosen translation API into your development environment.

- Preprocess Your Data
  - Clean and format your data for consistency.
  - Use techniques like tokenization and normalization for text data.

- Train Your Model
  - Incorporate the translation API to handle multilingual data.
  - Use techniques like transfer learning if you have pre-trained models.

- Evaluate and Fine-Tune
  - Test your model with different languages to ensure accuracy.
  - Fine-tune parameters based on performance metrics.

- Deploy and Monitor
  - Deploy your model in a real-world environment.
  - Continuously monitor and update the model based on feedback.
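To make the preprocessing, translation, and evaluation steps above more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a reference implementation: `CachedTranslator`, `stub_backend`, `toy_predict`, and `accuracy_by_language` are hypothetical names invented for this example, and the stub backend is a tiny lookup table standing in for whichever translation API you choose. In a real project you would replace `stub_backend` with a call to your provider's client library and `toy_predict` with your trained model.

```python
import unicodedata
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

# Hypothetical backend signature: (text, source_lang, target_lang) -> translated text.
TranslateFn = Callable[[str, str, str], str]


def normalize(text: str) -> str:
    """Unicode-normalize (NFKC), lowercase, and collapse runs of whitespace."""
    return " ".join(unicodedata.normalize("NFKC", text).lower().split())


def tokenize(text: str) -> List[str]:
    """Naive whitespace tokenizer; real projects often use a subword tokenizer."""
    return normalize(text).split()


class CachedTranslator:
    """Wraps a translation backend and caches results to avoid repeated calls."""

    def __init__(self, backend: TranslateFn, target_lang: str = "en") -> None:
        self.backend = backend
        self.target_lang = target_lang
        self.backend_calls = 0
        self._cache: Dict[Tuple[str, str], str] = {}

    def translate(self, text: str, source_lang: str) -> str:
        text = normalize(text)
        if source_lang == self.target_lang:
            return text  # already in the target language, no backend call needed
        key = (source_lang, text)
        if key not in self._cache:
            self.backend_calls += 1
            self._cache[key] = self.backend(text, source_lang, self.target_lang)
        return self._cache[key]


def stub_backend(text: str, source_lang: str, target_lang: str) -> str:
    """Stand-in for a real translation API: a tiny lookup table, for demo only."""
    table = {
        ("es", "hola mundo"): "hello world",
        ("fr", "bonjour le monde"): "hello world",
    }
    return table.get((source_lang, text), text)  # unknown text passes through unchanged


def toy_predict(text: str) -> str:
    """Placeholder for a trained model: flags texts containing the token 'hello'."""
    return "greeting" if "hello" in tokenize(text) else "other"


def accuracy_by_language(
    examples: Iterable[Tuple[str, str, str]],
    predict: Callable[[str], str],
    translator: CachedTranslator,
) -> Dict[str, float]:
    """Translate each (lang, text, label) example, predict, and score per language."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for lang, text, label in examples:
        total[lang] += 1
        correct[lang] += int(predict(translator.translate(text, lang)) == label)
    return {lang: correct[lang] / total[lang] for lang in total}


if __name__ == "__main__":
    translator = CachedTranslator(stub_backend)
    data = [
        ("en", "Hello world", "greeting"),
        ("es", "Hola   Mundo", "greeting"),
        ("fr", "Bonjour le monde", "greeting"),
        ("fr", "Salut tout le monde", "greeting"),  # missing from the stub table
    ]
    print(accuracy_by_language(data, toy_predict, translator))
```

Reporting accuracy per language rather than as one overall number makes gaps visible: in this toy run the French example that the stub cannot translate pulls French accuracy down while English and Spanish stay perfect. The cache is a design choice worth keeping in a real system, since translation APIs are typically billed per request or per character.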

The Importance of Data Quality and Governance

The success of AI systems heavily depends on the quality and diversity of training data. High-quality data ensures accurate, unbiased, and reliable AI outcomes, while poor data quality can lead to biased models and inaccurate predictions. Data governance frameworks are crucial for managing data quality, ensuring privacy, and maintaining compliance with ethical standards. Organizations like AWS provide tools for data collection, annotation, and evaluation to support AI development.

Synthetic Data: A Game Changer

In cases where real data is scarce or sensitive, synthetic data offers a viable solution. Synthetic data, generated by AI to mimic real-world data, provides:

- Unlimited data generation

- Privacy protection

- Bias reduction

Techniques like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) are employed to create synthetic data, which helps in training robust AI models without compromising privacy.
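GANs and VAEs are too large to show in a few lines, so the sketch below swaps in a much simpler generator to illustrate the same underlying idea: learn the statistics of real data, then sample new records from the learned distribution instead of reusing the originals. It fits a single Gaussian to a numeric feature using only the Python standard library. The function name `synthesize` and the sample values are made up for this example, and a single Gaussian is a strong simplifying assumption that real data often violates.

```python
import random
import statistics
from typing import List


def synthesize(real_values: List[float], n: int, seed: int = 0) -> List[float]:
    """Draw n synthetic values from a Gaussian fitted to the real sample."""
    mu = statistics.mean(real_values)
    sigma = statistics.stdev(real_values)  # sample standard deviation; needs >= 2 values
    rng = random.Random(seed)  # seeded so the output is reproducible
    return [rng.gauss(mu, sigma) for _ in range(n)]


if __name__ == "__main__":
    # Illustrative values only, e.g. sentence lengths in tokens.
    real_lengths = [12.0, 15.0, 9.0, 20.0, 14.0, 11.0, 17.0, 13.0]
    synthetic = synthesize(real_lengths, n=1000)
    print("real mean:", round(statistics.mean(real_lengths), 2))
    print("synthetic mean:", round(statistics.mean(synthetic), 2))
```

Note that sampling from a fitted distribution does not by itself guarantee privacy or remove bias: the generator reproduces whatever patterns, including skewed ones, were present in the data it was fitted on.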

Overcoming Challenges in AI Training

Training AI models involves several challenges, including data imbalance, algorithm selection, computational resources, talent acquisition, and project management. Here are some solutions:

- Technical Solutions:
  - Data augmentation
  - Regularization
  - Transfer learning

- Organizational Strategies:
  - Fostering clear communication
  - Collaboration
  - Continuous learning
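Data imbalance, the first challenge named above, is common in multilingual datasets where one language dominates. The sketch below shows two simple, standard countermeasures in plain Python: inverse-frequency class weights (the same formula as scikit-learn's "balanced" heuristic, samples divided by classes times class count) and random oversampling of minority classes. Neither is data augmentation in the strict sense, since no new examples are created. The function names and the toy dataset are assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def class_weights(labels: List[str]) -> Dict[str, float]:
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


def oversample(examples: List[Tuple[str, str]], seed: int = 0) -> List[Tuple[str, str]]:
    """Duplicate random minority-class examples until every class matches the largest."""
    rng = random.Random(seed)
    by_label: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for text, label in examples:
        by_label[label].append((text, label))
    target = max(len(group) for group in by_label.values())
    balanced: List[Tuple[str, str]] = []
    for group in by_label.values():
        balanced.extend(group)
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced


if __name__ == "__main__":
    # Toy dataset: (text, language label), heavily skewed toward English.
    data = [(f"english text {i}", "en") for i in range(8)]
    data += [(f"swahili text {i}", "sw") for i in range(2)]
    print(class_weights([label for _, label in data]))
    print(Counter(label for _, label in oversample(data)))
```

Class weights leave the dataset untouched and are passed to the loss function, while oversampling changes the dataset itself. Oversampling repeats the same few examples, so it can encourage overfitting on the minority class; pairing it with regularization is a common precaution.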

Ethical and Regulatory Considerations

Ethical AI development is paramount, focusing on fairness, transparency, and accountability. Initiatives like the Shanghai Declaration and the Bletchley Declaration emphasize global cooperation, data protection, and governance mechanisms to ensure responsible AI use. The U.S. Department of Defense’s AI adoption strategy and DARPA’s AI Next campaign highlight the importance of ethical AI in defense and public sector applications.

AI in Industry and Research

AI is revolutionizing various industries, including construction, finance, retail, and healthcare. It enhances productivity, safety, and decision-making by leveraging big data and cloud computing. High-quality training data is essential for AI to identify patterns and make accurate predictions, driving innovation and efficiency.

Diversity and Bias Mitigation

Ensuring diversity in AI training data is critical to preventing biases and achieving fair AI systems. Diverse data sources help mitigate risks associated with biased models, which can lead to unfair outcomes. Strategies to enhance data diversity include:

- Breaking down data silos

- Transforming unstructured data

- Collaborating with partners

- Acquiring third-party data

- Creating synthetic data
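Before and after applying strategies like these, it helps to measure how diverse a dataset actually is. One possible way, sketched below, is to compute each language's share of the dataset and summarize the spread with normalized Shannon entropy, where 1.0 means all languages are equally represented and 0.0 means a single language. The function names and the choice of language as the diversity dimension are assumptions for this example; the same calculation works for any categorical attribute.

```python
import math
from collections import Counter
from typing import Dict, List


def language_shares(langs: List[str]) -> Dict[str, float]:
    """Fraction of the dataset contributed by each language."""
    counts = Counter(langs)
    total = len(langs)
    return {lang: count / total for lang, count in counts.items()}


def normalized_entropy(langs: List[str]) -> float:
    """Shannon entropy of the language distribution, scaled to the range [0, 1]."""
    shares = list(language_shares(langs).values())
    if len(shares) <= 1:
        return 0.0  # zero or one language: no diversity to measure
    entropy = -sum(p * math.log(p) for p in shares)
    return entropy / math.log(len(shares))


if __name__ == "__main__":
    skewed = ["en"] * 90 + ["es"] * 5 + ["fr"] * 5
    even = ["en"] * 30 + ["es"] * 30 + ["fr"] * 30
    print("skewed:", round(normalized_entropy(skewed), 3))
    print("even:", round(normalized_entropy(even), 3))
```

A single score like this only covers the attribute you measure. A dataset can be balanced across languages and still be skewed by dialect, topic, or source.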

Future Directions

Model training techniques continue to advance, and transfer learning in particular promises more efficient training by reusing pre-trained models. Human oversight remains crucial in curating data and refining models. Companies like Oracle and platforms like Shaip and Scale AI offer solutions that span the AI lifecycle, from data collection to model evaluation, with an emphasis on quality and ethics.

Final Words

In summary, AI’s development and application span a wide range of technologies and industries, driven by high-quality, diverse data and robust governance frameworks. Ethical considerations and continuous innovation are essential for realizing AI’s potential while mitigating risks associated with biases and data quality. Integrating translation services into ML projects can unlock new possibilities, making your applications truly global and inclusive.

Before we wrap up, here’s a quick quiz for you to test your understanding:

- What are the key steps in integrating translation services into ML projects?

- How does synthetic data benefit AI model training?

- Name three strategies to enhance data diversity in AI training.

Feel free to leave your answers in the comments below!